This is an occasional series in which I cover a small aspect of a book that currently has my attention. It's not intended as a review, but perhaps a morsel that might get people to read some of the great books out there right now.

Yesterday, I mentioned the corners of projects that collected poor code and lost docs like the underside of skirted couches gather dust bunnies and petrified Pop Tarts (also, baby bottles, one of which I once found when my son was four. Ewww...).

I had always thought that more control was the answer. We just had to read our tickets more. We needed checklists. We needed all sorts of tag-ups. We needed more managers and leads to poke us with sticks when we didn't read up, show up, and get up and go.

It was when I was considering how to get shock collars past our OSHA guy that I realized I might be approaching a line.

Peopleware, by Tom DeMarco and Timothy Lister, happily, had a solution that didn't require purchases that might be embarrassing should they end up in USA Today. The authors recommend bringing back the chaos.

Chaos is not a bad thing. You just have to understand what Chaos is buying you.

Ooh, shiny!

Doing something new and novel excites the mind. When the brain sees something new, it really lights up. All sorts of centers talk to all sorts of other centers trying to figure out what is going on. If you have a bunch of developers who like this kind of stimulation, giving them a novel way to interact or organize can get them fired up a bit.

Tabula Rasa

We get crusty when we get used to a process. What a group needs sometimes is something new and fresh: a blank slate. There's nothing more depressing than using the same stained and tarnished wiki full of ideas half written down and efforts only partially started.

Cobwebs

Every project organization system has a different focus. Some focus on having, come hell or high water, a full test suite. Some focus on completeness of fulfilling the objectives. Some focus on organic growth of what the product is intended to do. Some focus on the user experience.

Look at where you keep slipping up, and switch to a system that works for your group.

The Dark Corners of the Soul

Sometimes, we don't just forget something. We ignore it. We call our users stupid for not being able to learn a simple admin interface, or eschew tests as a waste of time.

We are sometimes bad people.

Switching up allows us to take those dark corners of our souls where we have stored up all our snark and distaste and brighten them up. Maybe we'll learn that tests don't take up all that much time, or that maybe our users aren't so stupid (we're just terrible designers).

So how can you introduce Chaos?

Switch up the system

Test driven development. Scrum. Agile. Interface driven development. Hell, even Waterfall (useful if you hate your developers!). Switch it up a bit. Which system is going to help your group the most? Which have you never tried, but been curious about?

Start somewhere new

Do requests always get filtered through development first? Why not start with design? Or your interface expert? Give another group a chance to take the lead, and another group the chance to see what it's like to get requirements not hand-tailored for them.

Communication shifts

For one release, swap face-to-face meetings for IRC. Or meet somewhere new. Try a new ticketing system. It's more than just getting a new wiki: it's about having something shiny and cool to play with when it comes time to work.

Chaos isn't something to be afraid of. Most of the world is made of chaos, and we seem to get along okay. As long as your group can harness its power, rather than letting it run you, you can go a lot further than riding the back of entropy and 'proven methods.'

Friday, May 29, 2009

Wednesday, May 27, 2009

How do I track requirements?

One of the questions I get via Twitter and comments is how I track requirements. When I came into a job that had gathering requirements as a part of its duties, I got the duties without any sort of best practices (one of the quirks of coming onto a contract that was still dewy faced). People gathered them however they felt suited them and the customer, giving me a lot of leeway as to how I felt I should do it.

I had a lot of false starts, but we've come to something that works for the document wizards, developers, and customers.

But Framework X does that out of the box!

The first method was not to gather requirements at all, because the developers had found the magic bullet we needed. All I had to do was write up what the current framework did.

Oh my god. Do NOT fall for this.

Unless the requirement is stupidly simple, you will almost always have to do customization. Actually, if the requirement is stupidly simple, it's guaranteed that one thing will always pop up:

You haven't gathered all the requirements.

So many times we'd set up some naked framework, skin it with something we downloaded and, if we were feeling artsy, blinged out with a new header image or something, and hand it over to the customer. And the customer would poke at it. Best case, we'd hear back from them that it wasn't quite what they were looking for. Worst case?

We'd never hear from them again.

Quiet customers are not always a good sign. We'd check back after a few weeks, only to see our wunderprodukt lying fallow, collecting dust and not even generating a system log anymore.

So, even if we have the perfect solution, I make sure to gather requirements. I show the customer the framework and sit with them while we poke at it and look at everything it does... and keep an ear out for what it doesn't do.

Tickets to requirements

All requirements must be in a ticket. Every last one of them. If it's something that's going to generate code, text, graphics, CSS, or even just some thinking, it goes into a ticket. Keeping requirements in a document is a joke. The document is useful for keeping a nice concise record of the requirements somewhere. It's like a tar file. You don't work off the tar file. You keep it as an archive.

One of the key parts of this is limiting the number of requirements/tickets. The poor dev/tech lead brain can only retain so much at once (too many movie quotes and such rattling around in there).

Body language

There's a danger to keeping everything in tickets, however.

Comments.

I appreciate the need for comments, but sometimes, I hate the little buggers. They're a fetid breeding ground for cross-communication, requirement creep, and pure paradoxes (The cat must be both dead and alive at the same time. You can do that in Python, right? If not, we should see if the Java guys can do it!)

For that reason, I laid down the law that if you really, really love that new idea of yours, it had better go in the body of the ticket. Comments are kind of like fireflies in a jar. Nice to look at for a while, but they're going to die pretty quick.

User stories and interactions

I love interactions. I really do. An interaction might go like this:

Jane goes to her widget maker and logs in. She is taken to the home page. There, she clicks on "Make a new widget".

A box pops up, allowing her to enter in all the details of the widget. She notices that the "Make my widget" button is inactive. Once she has filled in all the required fields for her widget, the button becomes active. She clicks it.

The box closes, and her widget is now featured as a line of text under "My widgets".

I have two rules about interactions:

If I don't say the user goes to a new page, they don't.

If I don't say a user has to click to get to a new page, they don't.

Sometimes it takes running through a few to realize how many functions have to be exposed, and to come up with a sensible way to expose that functionality without drowning the user in it.

A nice thing about interactions is that more than one person can use them. Developers can use them to see what needs to be put where. Designers can use them to condense and organize functionality (and then make it awfully pretty). Even customers can read them to make sure that we're still in line with their vision.
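
As a rough illustration of how a developer might read Jane's interaction, here is a minimal Python sketch. Everything in it is an assumption made for the example: the class names, the method names, and the two required fields ("name" and "color") are hypothetical, since the interaction never says what a widget actually needs. It only shows that each sentence of the story maps to something checkable: the button stays inactive until the required fields are filled, and the widget ends up as a line of text under "My widgets".

```python
from dataclasses import dataclass, field

# Hypothetical required fields; the interaction does not list them.
REQUIRED_FIELDS = ("name", "color")


@dataclass
class WidgetForm:
    """The box that pops up when Jane clicks "Make a new widget"."""
    values: dict = field(default_factory=dict)

    def fill(self, name, value):
        self.values[name] = value

    def can_submit(self):
        # The "Make my widget" button is active only when every
        # required field holds a non-blank value.
        return all(str(self.values.get(f, "")).strip() for f in REQUIRED_FIELDS)


@dataclass
class HomePage:
    """The home page, including the "My widgets" list."""
    my_widgets: list = field(default_factory=list)

    def make_widget(self, form):
        if not form.can_submit():
            raise ValueError("button is inactive: required fields missing")
        # The box closes and the widget appears as a line of text.
        # No new page is involved, because the interaction never says so.
        self.my_widgets.append(form.values["name"])


page = HomePage()
form = WidgetForm()
form.fill("name", "Sprocket")
print(form.can_submit())   # False: "color" is still empty
form.fill("color", "blue")
print(form.can_submit())   # True
page.make_widget(form)
print(page.my_widgets)     # ['Sprocket']
```

Note that the sketch follows the two rules: there is no page change and no extra click anywhere in it, because the interaction didn't ask for one.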

Two week dashes/scrums

Sometimes a project hands us more requirements than our poor heads can handle at once, but isn't handing us a ton of time. In that case, I usually break up requirements into small, two week chunks. One scrum, we might focus on a certain user type's interactions. Another, ACL. Another, authentication. One of the last ones, implementing design.

The two week scrums help us get alarm bells early that something isn't going right, and help focus our resources on one problem. There's always going to be one guy that is more into authentication than stupid templates, and one that wants to do cool CSS rather than sigh endlessly over optimization. If one resource is hopelessly bored, we can always release him for another project until something cool comes up again.

At the end of the two weeks, we can look at what was done, what wasn't done, and determine if we need a second scrum, or if those hanging reqs are looking a bit silly now and should just be canned.

None of the above leads to a perfect system, which is why I tend to switch around from project to project. If you get too used to where you can sweep the crumbs, you end up with pockets of dust bunnies and petrified Pop Tarts that can lead to a distressed customer, a stressed out PM, and some sad-faced devs looking at their weekend disappearing.

Friday, May 22, 2009

UI's - Full Circles

Note: I'm utterly swamped, so doing a repost of an article I wrote a while back that I've always been fond of. Here's to hoping next week is calmer!

In the beginning, there was the command line, and we were grateful for it, darn it. A single blinking underscore in orange or amber or green (depending on what strange theory was in vogue about eyestrain at the time) was all we needed to get our computers to do what we needed them to do.

My first foray into computers was during this time. I cut my teeth on the Commodore 64, a beige box filled with strange churning sounds that allowed me to program and play games made simply of words and my own mad stabs at the game's internal dictionary. A few of my games had images, but nothing that could be called an interface, per se. They were usually badly pixelated depictions of trolls that were trying to crack open my skull before I saved the maiden fair.

Then came the GUI. Graphical user interfaces (GUIs) first reached the general public with Apple's Lisa and Macintosh. Instead of having to remember commands like CD (change directory), LS (list all files), or PS -A (list all processes), you found what you needed in the interface. Instead of CD MYDOCU~1, you clicked on "My Documents". Instead of LS -v, you simply looked at the contents of the folder, displayed in a handy box. PS -A? They had a handy tool for that. The command line as a common tool was dead.

Where GUIs removed obfuscated commands from the user experience, they ushered in a way of working that was easier for the average person to understand. People outside of the usual circle of technophiles started to use computers. Businesses brought them in, as it no longer took a separate degree to be trained on them. The machine, created by us, had started to change us: how we did work, how we thought about computers.

GUIs were not without problems, though. Problems usually arise when engineers have created the interface. The melt-down at Three Mile Island was, in part, the result of an engineer doing this. Two dials that had to be monitored simultaneously were placed on opposite ends of the room. If there were two technicians on hand, they had to yell out the readings to each other. If only one was on duty, he or she had to run back and forth from one dial to another. To an engineer, where the dials were placed made sense. It simply didn't make sense once you added the human element.

While a faulty interface in a computer program has never led to a nuclear melt-down, it can lead to endless frustration for even the most technical of users. In the early days of graphical programs, standards hadn't been settled upon by the various competitors, with good reason. Each hoped to get the customer so used to their style that they would never make the switch. A famous rivalry was that between Microsoft and Corel. Both produced a set of expensive office tools used for document and spreadsheet creation. The core features were nearly identical. In other arenas, the battle for dominance might have taken place in advertising, with clever slogans and campaigns to keep users hooked. With Microsoft and Corel, however, the battles took place in the UI. They moved around common elements, such as word formatting and options, just enough to make the switch daunting to the average user.

Once the initial rush to claim users was over, standards began to evolve. We based icons and organization off of things in our everyday life: folders to hold things, magnifying glasses to look for things, images of disks to indicate saving things. As time passed, users and developers began to agree on standard icons and terminology. An image of a disk was used to indicate the ability to save what you were working on, not to open a saved item.

Having an interface, though, started to change the physical appearance of the computer. Monitors, in the days of amber screens, didn't have to be high resolution, or even very large. A nine-inch monitor wasn't unheard of. Color was rarely necessary, and when it existed, it was usually only in the most basic of primary and secondary tones. An interface done only in blocky ROY G. BIV is painful to use, so higher resolution monitors started to become the standard. In order to render these higher resolutions, better processors became necessary.

Not only were existing elements improved, but new pieces of hardware were added. The mouse became absolutely vital to navigating a visual interface. Where before you found your way in a file system semantically, by file paths, now you did it visually, by remembering what you put where. Instead of keystrokes, you had clicks.

A funny thing happened with adding the mouse. People started complaining that their wrists hurt. The first reactions, in the mid- to late-eighties, were derisive. "Mouse arm" became a running joke around many agencies. To those that had developed it, it wasn't nearly so funny. We were used to the idea that sitting in a chair for eight hours straight might hurt our backs that evening, but it had never occurred to us that using something might actually damage us. Ergonomics was beginning to enter the scene. Gel pads found their way onto desks, mice were made to fit the hand better, and studies were conducted to see what the optimal positions were to make sure we weren't hurting our backs, hands, or eyes. As for those who had already developed what came to be known as carpal tunnel syndrome, many resorted to braces around their wrists. When that didn't help, they often had to resort to surgery.

In the past ten years, a curious thing has been happening with interfaces. The humans using them are beginning to push back. First, engineers thought they knew best how to display data. Then it was human factors psychologists. What became clear, however, was that users wanted to be able to define their own experience. Applications with a static tool bar began to lose favor to those that gave the user the most choice in where elements might be displayed, and which ones they wanted to toss entirely.

The first time I saw a custom interface was when I was introduced to the Plus! package from Microsoft. It seemed a cute way of customizing the way your desktop looked, linking background, icon sets, and color schemes. As I looked around for new themes to install, I found the usual gamut of cartoon characters and puppies, but I also found something interesting: themes based on working. One used a background where you could sort files and folders into color-coded categories. File types were color-coded blobs that were easy to find in a visual scan.

As the years passed, I noticed more products coming out that allowed a user to customize their experience. Products like Konfabulator and Google Desktop not only allowed a user to change how their desktop looked, but what was displayed there. Little portlets could display the weather, headlines from a news source, or the latest entries from a blog.

Up to this point, customization seemed limited to serious applications, like word processors and spreadsheet managers. A few less-serious areas had grabbed onto customization technology, like RSS feeds and blogs, but things like games remained locked into whatever a designer had decided back in development. This all changed with a game called World of Warcraft.

World of Warcraft is a massively multiplayer online game (MMO), where people level up avatars by killing rats, then ogres, then demi-gods (with a few steps in-between, naturally). It wasn't the first to do this. Earlier games, such as EverQuest, Ultima Online, and Dark Age of Camelot, worked along the same lines, and each had a reasonable player base. Warcraft came out, and sales sky-rocketed. People not only bought it, but played it, and kept playing it.

My husband had to talk fast to get me to play another MMO. I'd left the last one in disgust, and swore never to play another one again. He assured me that Warcraft would be different. After installing it, he went to work on my interface. Blizzard, Warcraft's creator, had opened up a tool-set to allow users to create custom interfaces for their game. Users then turned around and posted them. I was able to install products that allowed me to see information that I wanted, how I wanted it. I was a damage-dealer, so I wanted data on how hard I was hitting. I could get that in floating numbers as I hit something, then as a print-out after a fight was over. My husband wanted a map that he could mark up however he wanted, noting everything from where he found cool things, where neat views were, or where a group of us were meeting up.

While advertising and buzz got people to the store to buy the game, it didn't make them continue to play (paying a monthly fee all the while). The other games had content. They had dragons and neat gear to wear. What they didn't have was the ability for the user to have control over what they saw, and how they experienced the game.

One intriguing result of the add-ons was how they began to influence the game itself. As more dungeons were created, more encounters were not only made easier by the add-ons, but seemed to require them. One popular modification was Decursive. When a person in your group became cursed, certain classes had the ability to remove that curse. Before Decursive, this took constant scanning. With the mod installed, a box would pop up showing the affected character. Click the box, and the right spell went off, curing him or her. After Decursive became popular, the dungeon designers at Blizzard started adding in creatures that, instead of sending out curses one at a time, would affect the entire group or raid. Curing them all would be impossible without Decursive installed. The interface was now not only changing how the user interacted with the game, but was changing how that game was further developed. Not only were the humans pushing back, but the machine was responding.

It has taken time for designers and engineers to let go of the idea that they know what the users need most. As our capabilities grew in designing interfaces, studies grew, trying to discern how to capture the attention of the most users. Were animations helpful, or harmful? What colors were best for indicating something was a link? What part of the page is best for getting someone's attention? How can we affect how much a user comes away with? Any time a study tried to answer one of the above questions, the researchers usually came away with an option that was strong, but certainly didn't cover the entire subject pool they had studied.

The recent release of Google's personalized web page works on the basis that one answer will not suit everyone. Previous portals, such as Yahoo's portal circa 1998, only allowed a set number of items to be shown, and all had to be on the same page. With Google's portal, users have the ultimate flexibility: they can choose content, placement, and even group items in ways that make sense to them. Users can even create their own custom portlets, then share them for others to use. In my office, most of my coworkers have Google's portal as their homepage, but everyone uses it differently. One groups different news sources on different pages. Another keeps track of blogs and local events. I have weather, comics, and a few news feeds to keep me current. When I was a user of Yahoo's portal, I knew of almost no other users. Now, everyone I know seems to use some variation of Google's homepage.

The cycle of us pushing back on technology is showing in one area: it's encouraging people to become more technical in order to get what they want. While most will never pick up a programming language, more people every year seem to know what an RSS feed is. For those that do know how to program, user communities are expanding for popular products, like bulletin board software or content management systems. Ten years ago, most members were computer science graduates, or those that had been in the industry for years. Today, online guides and "Dummies" books let nearly anyone learn to code, and communities are made up of professionals, but also of those who only picked up a book when they wanted their board or CMS to do something, but couldn't find someone else who had done it already.

Indeed, in a few small ways, we're almost coming full circle. I was in one of my clients' offices a few weeks ago. He wasn't the most technical of customers. Though brilliant, he had trouble with his laptop on a daily basis. I was there to find out why syncing was taking him so long.

"Can you bring up your task monitor? Go to the Apple icon--"

He cut me off. "Oh! I found another way you can do that!" He opened up a command line terminal, then pecked out PS -A. He hit enter, and a list of his current processes popped up, complete with how much they were eating at his processor. "Isn't that clever?"

"Boy," I said, "Wait until I show you grep."

In the beginning, there was the command line, and we were grateful for it, darn it. A single blinking underscore in orange or amber or green (depending one what strange theory was vogue about eyestrain at the time) was all we needed to get our computers to do what we needed them to do.

My first foray into computers was during this time. I cut my teeth on the Commodore 64, a beige box filled with strange churning sounds that allowed me to program and play games made simply of words and my own mad stabs at the game's internal dictionary. A few of my games had images, but nothing that could be called an interface, per se. They were usually badly pixilated depictions of trolls that were trying to crack open my skull before I saved the maiden fair.

Then came the GUI. Graphical user interfaces (GUIs) first appeared for the general public in the Apple ]['s. Instead of having to remember commands like CD (change directory), LS (list all files), or PS -A (list all processes), you found it in the interface. Instead of CD MYDOCU~1, you clicked on "My Documents". Instead of LS -v, you simply looked at the contents of the folder, displayed in a handy box. PS -A? They had a handy tool for that. The command line as a common tool was dead.

Where GUI's removed obfuscated commands from the user experience, they ushered in a way that was easier for the average person to understand. People outside of the usual circle of technophiles started to use computers. Businesses brought them in, as it no longer took a separate degree to be trained on then. The machine, created by us, had started to change us, how we did work, how we thought about computers.

GUI's were not without problems, though. Problems usually arise when engineers have created the interface. The melt-down at Three Mile Island was the result of an engineer doing this. Two dials that had to be monitored simultaneously were placed on opposite ends of the room. If there were two technicians on hand, they had to yell out the readings to each other. If only one was on duty, he or she had to run back and forth from one dial to another. To an engineer, where the dials were placed made sense. It simply didn't make sense once you added the human element.

While a faulty interface in a computer program never lead to nuclear melt-down, it can lead to endless frustrations to even the most technical of users. In the early days of graphical programs, standards hadn't been settled upon by the various competitors, with good reason. Each hoped to get the customer so used to their style, that they would never make the switch. A famous rivalry was that between Microsoft and Corel. Both produced a set of expensive office tools used for document and spreadsheet creation. The core features were nearly identical. In other arenas, the battle for dominance might have taken place in advertising, with clever slogans and campaigns to keep users hooked. With Microsoft and Corel, however, the battles took place in the UI. They moved around common elements, such as word formatting and options, just enough to make the switch daunting to the average user.

Once the initial rush to claim users was over, standards began to evolve. We based icons and organization off of things in our everyday life: folders to hold things, magnifying glasses to look for things, images of disks to indicate saving things. As time passed, users and developers began to agree on standard icons and terminology. An image of a disk was used to indicate the ability to save what you were working on, not to open a saved item.

Having an interface, though, started to change the physical appearance of the computer. Monitors, in the days of amber screens, didn't have to be high resolution, or even very large. A nine-inch monitor wasn't unheard of. Color was rarely necessary, and when it existed, it was usually only in the most basic of primary and secondary tones. An interface done only in blocky ROY G. BIV is painful to use, so higher resolution monitors started to become the standard. In order to render these higher resolutions, better processors became necessary.

Not only were existing elements added to, but new pieces of hardware were added. The mouse became absolutely vital to navigating a visual interface. Where before you found your way in a file system semantically, by file paths, you did it visually, by remember what you put where. Instead of keystrokes, you had clicks.

A funny thing happened with adding the mouse. People started complaining that their wrists hurt. The first reactions, in the mid- to late eighties, were derisive. "Mouse arm" became a running joke around many agencies. To those who had developed it, it wasn't nearly so funny. We were used to the idea that sitting in a chair for eight hours straight might hurt our backs that evening, but it had never occurred to us that using something might actually damage us. Ergonomics was beginning to enter the scene. Gel pads found their way onto desks, mouses were made to fit the hand better, and studies were conducted to see what the optimal positions were to make sure we weren't hurting our backs, hands, or eyes. As for those who had already developed what came to be known as carpal tunnel syndrome, many resorted to braces around their wrists. When that didn't help, they often had to resort to surgery.

In the past ten years, a curious thing has been happening with interfaces. The humans using them are beginning to push back. First, engineers thought they knew best how to display data. Then it was human factors psychologists. What became clear, however, was that users wanted to be able to define their own experience. Applications with a static tool bar began to lose favor to those that gave the user the most choice in where elements were displayed, and which ones they wanted to toss entirely.

The first time I saw a custom interface was when I was introduced to the Plus! package from Microsoft. It seemed a cute way of customizing the way your desktop looked, linking background, icon sets, and color schemes. As I looked around for new themes to install, I found the usual gamut of cartoon characters and puppies, but I also found something interesting: themes based on working. One used a background where you could sort files and folders into color-coded categories. File types were color-coded blobs that were easy to find in a visual scan.

As the years passed, I noticed more products coming out that allowed a user to customize their experience. Products like Confabulator and Google Desktop not only allowed a user to change how their desktop looked, but what was displayed there. Little portlets could display the weather, headlines from a news source, or the latest entries from a blog.

Up to this point, customization seemed limited to serious applications, like word processors and spreadsheet managers. A few less-serious areas had grabbed onto customization technology, like RSS feeds and blogs, but things like games remained locked into whatever a designer had decided back in development. This all changed with a game called World of Warcraft.

World of Warcraft is a massive online game (MMO), where people level up avatars by killing rats, then ogres, then demi-gods (with a few steps in-between, naturally). It wasn't the first to do this. Earlier games, such as Everquest, Ultima Online, and Dark Age of Camelot worked along the same lines, and each had a reasonable player base. Warcraft came out, and sales sky-rocketed. People not only bought it, but played it, and kept playing it.

My husband had to talk fast to get me to play another MMO. I'd left the last one in disgust, and swore never to play another one again. He assured me that Warcraft would be different. After installing it, he went to work on my interface. Blizzard, Warcraft's creator, had opened up a tool-set to allow users to create custom interfaces for their game. Users then turned around and posted them. I was able to install products that allowed me to see information that I wanted, how I wanted it. I was a damage-dealer, so I wanted data on how hard I was hitting. I could get that in floating numbers as I hit something, then as a print-out after a fight was over. My husband wanted a map that he could mark up however he wanted, noting everything from where he found cool things, where neat views were, or where a group of us were meeting up.

While advertising and buzz got people to the store to buy the game, it didn't make them continue to play (paying a monthly fee all the while). The other games had content. They had dragons and neat gear to wear. What they didn't have was the ability for the user to have control over what they saw, and how they experienced the game.

One intriguing result of the add-ons was how they began to influence the game itself. As more dungeons were created, more encounters were not only made easier by the add-ons, but seemed to require them. One popular modification was Decursive. When a person in your group became cursed, certain classes had the ability to remove that curse. Before Decursive, this took constant scanning. With the mod installed, a box would pop up showing the affected character. Click the box, and the right spell went off, curing him or her. After Decursive became popular, the dungeon designers at Blizzard started adding in creatures that, instead of sending out curses one at a time, would affect the entire group or raid. Curing them all would be impossible without Decursive installed. The interface was now not only changing how the user interacted with the game, but was changing how that game was further developed. Not only were the humans pushing back, but the machine was responding.

It has taken time for designers and engineers to let go of the idea that they know what the users need most. As our capabilities grew in designing interfaces, studies multiplied, trying to discern how to capture the attention of the most users. Were animations helpful, or harmful? What colors were best for indicating something was a link? What part of the page is best for getting someone's attention? How can we affect how much a user comes away with? Any time a study tried to answer one of the above questions, the researchers usually came away with an option that was strong, but certainly didn't cover the entire subject pool they had studied.

Google's recently released personalized web page works on the basis that one answer will not suit everyone. Previous portals, such as Yahoo's portal circa 1998, only allowed a set number of items to be shown, and all had to be on the same page. With Google's portal, users have the ultimate flexibility: they can choose content, placement, and even group items in ways that make sense to them. Users can even create their own custom portlets, then share them for others to use. In my office, most of my coworkers have Google's portal as their homepage, but everyone uses it differently. One groups different news sources on different pages. Another keeps track of blogs and local events. I have weather, comics, and a few news feeds to keep me current. When I was a user of Yahoo's portal, I knew of almost no other users. Now, everyone I know seems to use some variation of Google's homepage.

The cycle of us pushing technology is showing results in one area: it's encouraging people to become more technical in order to get what they want. While most will never pick up a programming language, more people every year seem to know what an RSS feed is. For those that do know how to program, user communities are expanding for popular products, like bulletin board software or content management systems. Ten years ago, most members were computer science graduates, or those that had been in the industry for years. Today, online guides and "Dummies" books let nearly anyone learn to code, and communities are made up of professionals, but also of those who only picked up a book when they wanted their board or CMS to do something and couldn't find someone else who had done it already.

Indeed, in a few small ways, we're almost coming full circle. I was in one of my clients' offices a few weeks ago. He wasn't the most technical of customers. Though brilliant, he had trouble with his laptop on a daily basis. I was there to find out why syncing was taking him so long.

"Can you bring up your task monitor? Go to the Apple icon--"

He cut me off. "Oh! I found another way you can do that!" He opened up a command line terminal, then pecked out PS -A. He hit enter, and a list of his current processes popped up, complete with how much they were eating at his processor. "Isn't that clever?"

"Boy," I said, "Wait until I show you grep."

Wednesday, May 20, 2009

A la carte development cycles

When people ask what kind of shop we are, I usually answer for the Python group. We're an agile shop in a waterfall environment. However, that isn't true throughout the contract I'm on. Some of us are pure waterfall. Other groups work in two-week iterations. Even my own group sometimes breaks form and goes to a scrum cycle, or does proof-of-concept phases.

Why are so many people stuck on just doing one type of development cycle?

Ideally, we should be picking the perfect cycle for the project, one that makes the developers happy while not driving the PMs insane, or asking the customer to pay a premium for something that gives them way more paperwork than they thought they needed.

I have a dream where, before a project, we check out the scope and start figuring out exactly how we're going to do this new project, and all the factors involved in it. Here's some typical cases I run across:

Nail-biter

This is a project that has everyone from the top to bottom interested, somehow. CFOs and CEOs and CIOs are walking by now and then to check on progress. We aren't given a black box development environment: instead, we're coding in a plexiglass box with a metal floor that lets them occasionally shock our feet to see how we react. Developers start to iron their shirts. The tech lead stocks up on antacids. The customer demands regular updates.

Out of the Box Experience

We have a new project, and the developers insist their new framework will do everything we need out of the box. Attitudes are smug, and hours estimated are in the double digits. Extra features of the framework are snuck into the requirements, because hey, why not? We can finish this in a weekend, and still have time to go out and get beer! Developers do not iron their shirts, and the tech lead gets uneasy. The customer is sung songs about puppies and sunshine.

My favorite color is blue-- No, yellow!

The customer knows we need something, but we're not clear on what exactly. Feature lists become muddled as we try to work out exactly what this product is supposed to do. Developers become frustrated as no requirements are handed over. The tech lead is constantly in meetings, and looks like she's about to snap. The customer despairs that he may never get his product.

No single development style suits all of the above cases. An agile process would flop with a nail-biter, as the developers are constantly interrupted and could very well be too stressed to focus. Waterfall wouldn't work for the last case, as the requirements are constantly shifting.

Were I able to suggest a development style for each project, I'd probably go this route:

Nail-biter = Modified Waterfall

Waterfall has its issues, but it does have the effect of making paperwork. Paperwork can be read by uppity ups, or presented to them, or gisted by their assistant. If you do it right, you can keep the developers out of the line of fire until it's time to code, and hopefully by that time, you have it nailed down what you need.

I do hate waterfall (with the fiery passion of a thousand suns), but if you do it right, you can use it in a case like this.

Out of the box = Proof of concepts

Whenever someone tells me that something works 'out of the box', I write up interactions. User goes to this page. User clicks on this. This happens. User selects this option. MAGIC HAPPENS. I then give the developers two weeks to make those interactions work with what I wrote, with the rule that unless I say they go to another page, they stay right where they are.

This helps get the developers a deeper understanding of their new baby, and a more realistic estimate of hours. Frameworks often rely on admin modules that can be fairly hairy and user unfriendly, and getting functions out of those modules can be more work than anyone expected.

Once one set of interactions is done, I move on to the next, passing along interactions until we have enough of an application to start making it pretty and refining it.

Requirement fog = agile

There's always going to be a core set of features. We know that the file drop-box will have to have the capability to upload files. We probably want to delete them. Start there, and while the developers work on that, the customer can pick a few more requirements. Since code is tagged frequently, if a customer decides a new feature isn't for him, we can kill it with a roll-back. Developers are kept busy, and we slowly build a nice, feature-rich product that everyone is happy with.

As I said, it's a bit of a dream, though I'm starting to introduce it into various teams in my sneaky ways. That's how we ninja tech leads roll: leadership through subterfuge!

Monday, May 18, 2009

One girl + two cups, and some UML

I love UML.

No, I mean I really LOVE UML. The first thing I put on a new laptop is ArgoUML. I first learned it when I was picking up Plone, but since then have found it invaluable for gathering requirements. Give me some ideas about your site, and I will eventually be throwing diagrams at you.

When you put UML in front of a customer and a developer, you finally have one thing that not only speaks to both of them, but is useful for both of them. The customer can see how a site might fit together, and the developer can see how to build it without having to sift through the cruft of a design document.

Before you go out and start looking at UML specs, know that for most things you ever want to do, you only ever need to use about 10% of what the standard UML library offers. Actually, 10% might be high. I only ever use one box, three arrows, and the comment box.

There is one problem, however. You have to teach the customer, and sometimes the developer, how to read UML. The customer often wants to turn UML into a site arch, which will only lead to dozens of duplicate objects. The developer might waste time trying to guess what you mean rather than ask what the funny arrows mean. So I resort to my favorite teaching method: the analogy.

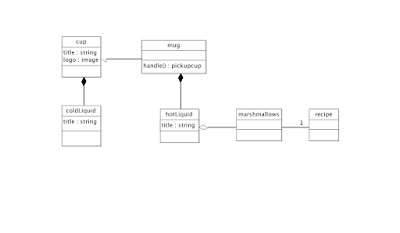

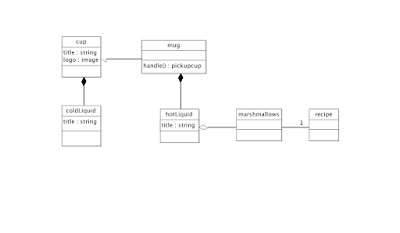

To explain UML, I use cups. In the above UML, you can see that I've made a cup object. It's just your generic cup, the kind the receptionist buys when she's feeling cheap that disintegrates if you leave it with any liquid in it over the weekend. It might even have a Dixie-esque logo, so we make sure we note that on the cup object. We also want to be able to call it something ("Crap-ass cups that Stacy bought, saving a grand total of ten bucks"), so we give it somewhere to hold a title.

We know that this cup is only really good for holding cold liquids, because the last time we tried to make tea in it, we ended up with a burned lap, scorched fingers, and a ruined weekend. Knowing that, we know to very specifically make sure that only cold liquids can go into the cup. The black diamond? If you want to get technical, you say the cup can be composed of cold liquid. However, I don't usually break out my two-dollar words when talking about stuff being in other stuff, so I just say contains. The cup can contain cold liquids.

But what if we want something that holds hot liquids? Do we make a new object? Nah. We're lazy developers, so we'll just use something that's lying around, like our good old cup object. We make a new object called mug, and set it to inherit from the cup class with a white arrow. Now, mug can do everything that cup can: have a logo, and hold cold liquids. It's not very useful to just have an identical class, though, so we need to start modifying mug.

I gave it a handle, since I hate picking up hot cups (I'm a delicate flower, after all). I also made sure to indicate that mugs can contain hot liquids. Now, mugs can hold hot or cold liquids, which pretty much covers the office gamut. Thinking about mugs and hot liquids, I started thinking about my favorite drink: cocoa. Well, marshmallows can go in cocoa, but marshmallows aren't a hot or cold liquid: they're solid awesome. So I use a different arrow: a white diamond. This means that the marshmallows can be contained in anything, but really, the best place for them is probably here. That way, I can have a cup of marshmallows in my generic cup sans cocoa, should that please me.

Thinking about marshmallows, I start thinking about s'mores. It's possible, now that I'm adding all these foodstuffs to my UML, that I could think about a recipe database (something any food lover who gets hit with SQL tries to make at some point). Well, I don't need a recipe to contain food. I just need it to be able to point at existing food. So I draw a line, and that shows that these two objects now can be associated somehow.
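For the developers in the room, here is roughly how I'd expect that diagram to land in Python. Treat it as a sketch under assumptions, not the one true translation: the method names (`pour`, `add_marshmallow`, `reference`) and the `accepted_liquids` attribute are made up for illustration, and hanging the marshmallows off the cup rather than the mug is my guess at where the white diamond attaches.

```python
class ColdLiquid:
    def __init__(self, name):
        self.name = name


class HotLiquid:
    def __init__(self, name):
        self.name = name


class Marshmallow:
    """Solid awesome. Exists whether or not anything is holding it."""


class Cup:
    # Hypothetical attribute: which liquid types this container tolerates.
    accepted_liquids = (ColdLiquid,)

    def __init__(self, title, logo=None):
        self.title = title
        self.logo = logo
        self.liquids = []       # black diamond: the cup "contains" its liquids
        self.marshmallows = []  # white diamond: held here, but they live on their own

    def pour(self, liquid):
        if not isinstance(liquid, self.accepted_liquids):
            raise TypeError(
                f"{type(self).__name__} cannot hold {type(liquid).__name__}"
            )
        self.liquids.append(liquid)

    def add_marshmallow(self, marshmallow):
        self.marshmallows.append(marshmallow)


class Mug(Cup):
    # White arrow: a mug does everything a cup does, plus hot liquids and a handle.
    accepted_liquids = Cup.accepted_liquids + (HotLiquid,)

    def __init__(self, title, logo=None, handle=True):
        super().__init__(title, logo)
        self.handle = handle


class Recipe:
    def __init__(self, name):
        self.name = name
        self.foods = []  # plain line: the recipe only points at existing food

    def reference(self, food):
        self.foods.append(food)


cup = Cup("Cheap office cup", logo="Dixie-esque")
mug = Mug("Cocoa mug")

cup.pour(ColdLiquid("water"))
mug.pour(HotLiquid("cocoa"))

try:
    cup.pour(HotLiquid("tea"))
except TypeError as err:
    print(err)  # Cup cannot hold HotLiquid

marshmallow = Marshmallow()
mug.add_marshmallow(marshmallow)

smores = Recipe("s'mores")
smores.reference(marshmallow)
```

The same marshmallow sits in the mug and is pointed at by the recipe, which is the whole difference between "contains" and "is associated with" in one line.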

It's silly, but it usually gets the idea across, and is a hell of a lot cheaper than most UML manuals out there.

Friday, May 15, 2009

import geek

Okay, I've given you three weeks to get a girl, and even gave you a guide extolling your virtues that you could hand out. Everyone have a girl by now?

Good.

There's one problem, though. Some of those ladies you've procured? They're not geeks. They're not even close. They go to the mall to shop rather than NewEgg, like restaurants where you get beaten up if you don't wear a tie (wait, those aren't just for funerals?), and like chicklit and movies that make them cry and you die a little inside.

Crap.

Do not fear, however! There is hope! You can make that woman a geek!

Start off slow

You can't dunk a woman into a vat of geekness and expect her to come out debating the differences between Perl and Python and how you could overclock your machine. She will come out like a cat you've dunked in cold bathwater. It will only be amusing for the person watching it on YouTube later.

So you have to start her off slow, building her tolerance (and eventually fostering a love) for what you like. (No guarantees about the overclocking thing, though)

Movies

Movies are a great place to start. There's some awesome movies out there that geeks love, and yet are super friendly to non-geek women. Hell, some of them are practically date movies! Start off with Labyrinth or Princess Bride, or Spaceballs if she likes humor. From those, you can start nudging her towards LOTR trilogy or the original Star Wars movies.

Warning: show her the newest Star Wars trilogy, and you will ruin all your efforts to convert her. Either she'll hate them and think the rest of the genre is like those abominations, or she'll love them, and we'll be obliged to exile her to Siberia.

TV

There's nothing a geek likes more than a good series. You can draw lines between us, based on what series we like the most. Trek? BSG? Doctor Who? Bab 5?

You can't just start your new flame with Deep Space Nine or Battlestar Galactica, though. All that heavy death and complex background? Sure-fire turn off. For this, I point to one of the least liked Star Trek Series there is: Voyager.

Yeah, it was pretty lame most of the time, but look at the elements: very little back story, tons of romance, goofy story lines, and a female commander with a hot second-in-command. Get her hooked, then move her on to the more hardcore stuff.

Games

While the number of women who game is growing, it's still not so high that you're likely to have snagged a woman who likes video games. Many probably had an NES or SNES growing up, but eschewed it as the stuff of youth once they started eyeing boys.

Sigh. Had they only known the aphrodisiac qualities of a woman who can rattle off the lives cheat to Contra.

At any rate, you want to reintroduce (or introduce, if she was terribly sheltered) her to gaming slowly. Tossing a 100+ hour RPG or a blood soaked shooter isn't going to win her over. Throwing a 360 controller at her with (/me thinks) two directional joysticks, one directional pad, four buttons, two alternate buttons, a power button and four triggers is going to annoy her. A computer game where you have to reference a keyboard schematic in order to play is going to annoy anyone. The best machine to get her into the game: the Nintendo DS. One directional pad. Four buttons. Two triggers you almost never use. And about any game you'd ever want to play.

And it totally fits in your purse. \o/

I have seen people who swore they would never game pick up a DS and play a few rounds of Super Mario or Sudoku. I talk to women on the train all the time who demanded their own DS after seeing their boyfriend's and picking it up out of boredom. Some just do the usual puzzle games, but I know a few that have moved onto rescuing the princess and slaying the zombie hordes.

Ah, the rituals of courtship

When courting, throw in things that show how being a geek is an advantage, rather than something to overcome or hide.

Step one: Cook.

Don't give me that look. You are smart. You can make spiraling towers of ones and zeros bend the reality within a computer to your will. You know languages by the handful, can rattle off the specs of a computer you had when you were eleven, and use text editors that require manuals. You can make your girl a dinner.

The trick is showing her how your way of cooking dinner is superior to the general way of cooking (which involves using mom's cookbooks that she no longer wanted). Geek cooking is totally different. It's finicky yet creative. Alton Brown is a geek. He believes in putting some effort out there to make your dish wonderful, and that precision is worth the attention.

There's even a site dedicated to cooking like an engineer. How better to show that being a bit of a geek is quite useful?

There's also the other stuff of courtship. Put your smarts to use to do other things, like make her cool things. My favorite geek story comes from Three Panel Soul, wherein he wrote a cute game in order to propose to his then girlfriend.

She accepted ;) A testament that you can seal the deal with enough cute geekery.

Good.

There's one problem, though. Some of those ladies you've procured? They're not geeks. They're not even close. They go to the mall to shop rather than NewEgg, like restaurants where you get beaten up if you don't wear a tie (wait, those aren't just for funerals?), and like chicklit and movies that make them cry and you die a little inside.

Crap.

Do not fear, however! There is hope! You can make that woman a geek!

Start off slow

You can't dunk a woman into a vat of geekness and expect her to come out debating the differences between Perl and Python and how you could be overclock your machine. She will come out like a cat you've dunked in cold bathwater. It will only be amusing for the person watching it on YouTube later.

So you have to start her off slow, building her tolerance (and eventually fostering a love) for what you like. (No guarantees about the overclocking thing, though)

Movies

Movies are a great place to start. There are some awesome movies out there that geeks love, and yet are super friendly to non-geek women. Hell, some of them are practically date movies! Start off with Labyrinth or The Princess Bride, or Spaceballs if she likes humor. From those, you can start nudging her towards the LOTR trilogy or the original Star Wars movies.

Warning: show her the newest Star Wars trilogy, and you will ruin all your efforts to convert her. Either she'll hate them and think the rest of the genre is like those abominations, or she'll love them, and we'll be obliged to exile her to Siberia.

TV

There's nothing a geek likes more than a good series. You can draw lines between us, based on what series we like the most. Trek? BSG? Doctor Who? Bab 5?

You can't just start your new flame with Deep Space Nine or Battlestar Galactica, though. All that heavy death and complex background? Sure-fire turn-off. For this, I point to one of the least liked Star Trek series there is: Voyager.

Yeah, it was pretty lame most of the time, but look at the elements: very little back story, tons of romance, goofy story lines, and a female commander with a hot second-in-command. Get her hooked, then move her on to the more hardcore stuff.

Games

While the number of women who game is growing, it's still not so high that you're likely to have snagged a woman who likes video games. Many probably had an NES or SNES growing up, but eschewed it as the stuff of youth once they started eyeing boys.

Sigh. Had they only known the aphrodisiac qualities of a woman who can rattle off the lives cheat to Contra.

At any rate, you want to reintroduce (or introduce, if she was terribly sheltered) her to gaming slowly. Tossing her a 100+ hour RPG or a blood-soaked shooter isn't going to win her over. Throwing a 360 controller at her with (/me thinks) two directional joysticks, one directional pad, four buttons, two alternate buttons, a power button and four triggers is going to annoy her. A computer game where you have to reference a keyboard schematic in order to play is going to annoy anyone. The best machine to get her into the game: the Nintendo DS. One directional pad. Four buttons. Two triggers you almost never use. And about any game you'd ever want to play.

And it totally fits in your purse. \o/

I have seen people who swore they would never game pick up a DS and play a few rounds of Super Mario or Sudoku. I talk to women on the train all the time who demanded their own DS after seeing their boyfriend's and picking it up out of boredom. Some just do the usual puzzle games, but I know a few who have moved on to rescuing the princess and slaying the zombie hordes.

Wednesday, May 13, 2009

Katie's Awesome Idea

I... had an awesome idea.

I sort of hate it when I have awesome ideas, because they're almost always for something that I simply don't want to do. I have little desire to run my own business. I have little desire to code a certain project. I don't really want to toil over game mechanics. So, usually, the ideas just sort of fester for a bit, then go away.

This time, I have decided to put it out there. Maybe someone, someday, will make this happen. I don't want a dime. I just want one opened by me.

So, the idea. I was down in the Ops center of NASA, bs'ing with our sysadmin and the infamous Jim. It was near lunch, but it was the only time we really had to go and bug Jim, so we ended up delaying him eating. I felt bad, and found myself wondering if next time, I shouldn't just schedule the meeting for a nearby restaurant.

Then I realized the flaws with that idea. See, our group had tried it in the past, and there were some serious drawbacks. No place to plug in our laptops. No wifi. We couldn't always get a table that was big enough. No way to diagram ideas without squinting at one person's laptop.

Oh, yeah. And there were other people there, making noise and stuff. Stupid people.

There were benefits, though. The food, in general, was better than what we could have if we just nuked food in the kitchenette. Also, everyone was eating, rather than just a few people who were prepared, being watched by those who were starving and needing to dash to the McD's down the street. People lingered, rather than bolting the second we had covered what we intended, leading to a wealth of interesting ideas and initiatives.

That's when it occurred to me: we need a restaurant with whiteboards. I voiced my thoughtgasm and was pooh-poohed by our sysadmin. "Katie, we need to get you out more."

Undeterred, I thought of what else would make the restaurant awesome. Wifi. Like, good wifi! Not that crap you get at Panera or Cosi that cuts out half the time and can't always take VPN. I want pipes that won't clog when my group all decides to bang on GitHub.

And I want whiteboards and a wealth of markers. I can't even get markers where I work. The marker gnomes steal half and take them back to their lairs for sniffing and painting each other, and the others are selfishly locked in desk drawers by people who never do anything with the boards anyway.

You know what? I want walls, too. I'd even be willing to pay a surcharge for getting them. That way, we could close the door and go crazy. No worrying about whether we're going to disturb the super-sensitive accountant who hasn't learned about the miracle of headphones.

Actually, walls made of whiteboard material would be pretty hot.

I want a hookup to a big display, and a wealth of connectors. Knowing that I don't have to find the weird connector for my new MacBook (Screw you, Apple) is well worth ordering an appetizer.

Give me a kiosk to buy things like pens and pencils and pads of paper, because if there's anything I always forget, it's stuff to write on. And sometimes, paper just works better.

The food doesn't even have to be foodie awesome. I'll accept food that's simply yummy and can keep everyone satisfied. Can you make the raw food vegan and the meatiarian happy? Good.

Also, beer wouldn't hurt. Because you know, there are some requirements meetings that you can't get through without some help.
